The Importance of Transparency in AI and LLMs: Unveiling the Black Box

Discover why transparency in AI and LLMs is essential for building trust, promoting fairness, and ensuring ethical decision-making.

The Role of AI in the Modern World

Artificial Intelligence (AI) and Large Language Models (LLMs) are becoming increasingly common in our lives. They are used in a wide range of applications, from virtual assistants like Siri and Alexa to self-driving cars, medical diagnosis, and financial analysis.

They have revolutionized how businesses and organizations operate. They have the ability to perform complex tasks that were once only possible for humans, such as image recognition, speech recognition, and natural language processing. These models have brought about numerous advancements and have the potential to drive innovation and growth in various fields.

However, AI and LLMs have a significant drawback: their decision-making processes are hard to inspect. These processes span the whole pipeline, including:

- Data collection

- Data preprocessing

- Model selection

- Training the model

- Validation

- Testing

- Decision-making

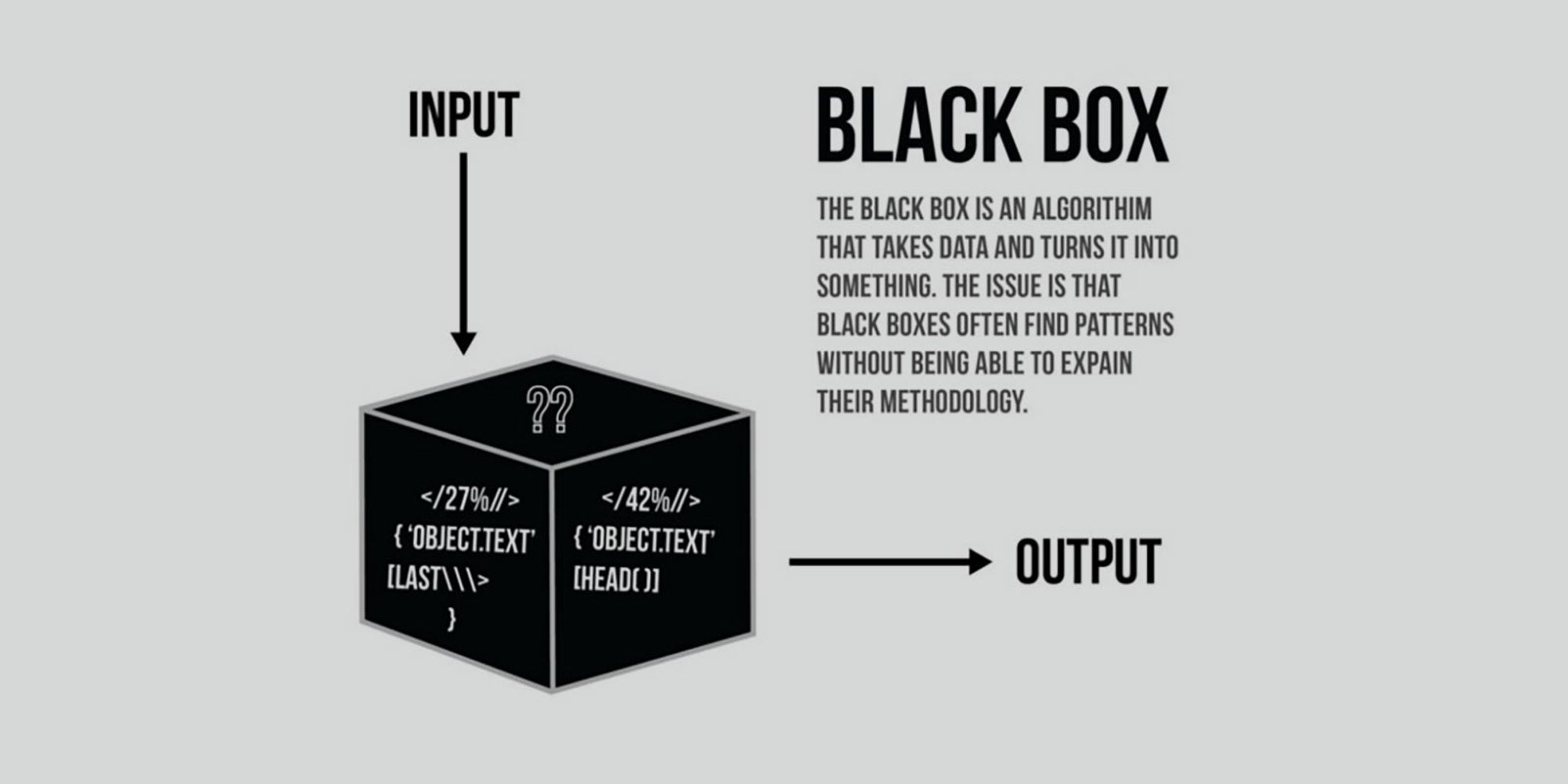

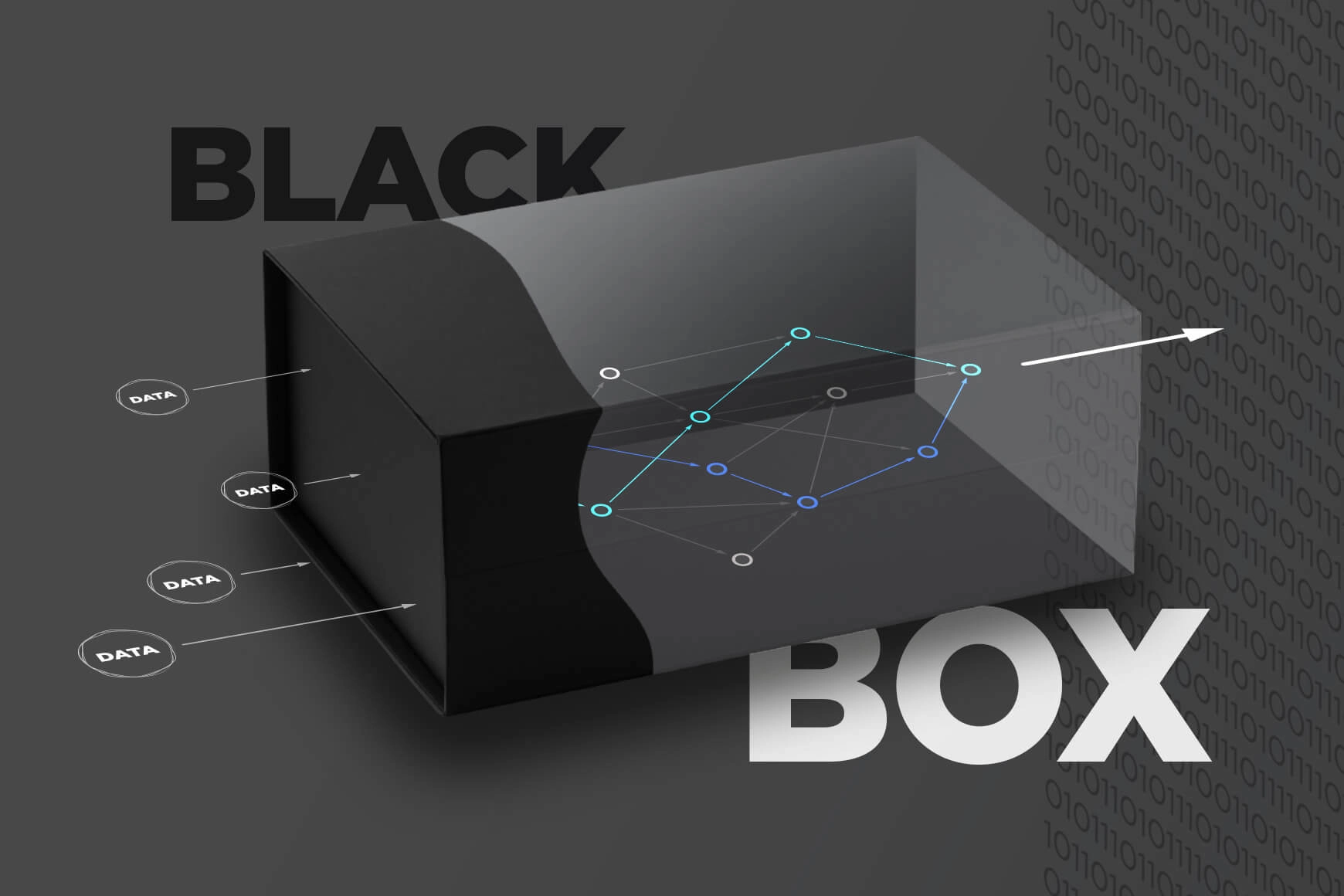

These stages are often viewed as a "black box" because they lack transparency: it is often difficult to understand how an AI or LLM reached a particular decision.

Black box

A black box model is a type of model where the relationship between the input and output is opaque or not easily interpretable. This means that while the model may be able to accurately predict outcomes based on input data, it may not be clear how or why it arrived at those predictions. This lack of transparency can be problematic in some situations, especially if the predictions are being used to make important decisions that affect people's lives.

Importance of Transparency in AI and LLMs:

Transparency is a critical aspect of artificial intelligence (AI) because it helps to ensure that AI systems are trustworthy, ethical, and fair.

Transparency is also important for building trust in AI and LLMs. When the decision-making process is transparent, it is easier for stakeholders to understand how the model works and to identify any potential issues. This can lead to greater acceptance and adoption of AI and LLMs.

The following are some of the key reasons why transparency is important in AI:

- Explainability: Transparency enables us to understand how AI systems work and how they arrive at their decisions. This is particularly important when AI systems are used to make decisions that affect people's lives, such as in healthcare, finance, and criminal justice. Explainability allows us to ensure that these decisions are fair, unbiased, and consistent with ethical and legal norms.

- Accountability: Transparency helps to hold AI systems accountable for their actions. When the inner workings of AI systems are transparent, it is easier to identify and correct errors, biases, and other problems. This is important for building trust in AI systems and ensuring that they are used responsibly.

- Trust: Transparency is essential for building trust in AI systems. When people understand how AI systems work and how they arrive at their decisions, they are more likely to trust them. This is particularly important in applications where AI is used to make decisions that affect people's lives, such as healthcare, finance, and criminal justice.

- Safety: Transparency is also important for ensuring the safety of AI systems. When the inner workings of AI systems are transparent, it is easier to identify potential risks and ensure that appropriate safeguards are in place.

Consequences of a Lack of Transparency

The lack of transparency in AI and LLMs can have serious consequences, such as:

- Legal and ethical issues

- Discrimination and bias

- Reduced trust and adoption

- Cybersecurity and privacy risks

- Lack of accountability

- Safety risks

For example, if an AI model is used to make decisions about creditworthiness, employment, or similar high-stakes matters, and its decision-making process is not transparent, unjust outcomes can go unnoticed and unchallenged. Furthermore, if an AI model is biased, its lack of transparency can make it difficult to identify and correct that bias.
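Even when a model's internals are hidden, its outputs can still be checked for uneven treatment. The following is a minimal sketch, not a complete fairness audit: it compares approval rates across two groups and reports the ratio between the lowest and highest rate. The function names, the sample decisions, and the group labels `A` and `B` are all hypothetical, invented for illustration.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the fraction of positive decisions (1 = approved) per group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        approved[group] += decision
    return {g: approved[g] / total[g] for g in total}

def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 = parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical credit decisions (1 = approved) and the group of each applicant.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A ratio far below 1.0 does not prove the model is biased, but it flags a gap that someone should be able to explain, which is only possible if the decision process can be inspected.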

Solving the “Black Box” Riddle

To address these issues, it is important to increase the transparency of AI and LLMs.

- One way to do this is to make the decision-making processes of these models more interpretable. Techniques such as feature importance analysis and partial dependence plots describe how the model behaves overall, while LIME (Local Interpretable Model-agnostic Explanations) explains how the model arrived at a particular decision.

- Another way to increase transparency is to make the data used to train the AI and LLMs more transparent. This can be done by providing access to the data used to train the model, as well as information about how the data was collected, pre-processed, and labeled. This information can help stakeholders understand how the model works and how it arrived at its decision.
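As an illustration of feature importance analysis, here is a minimal sketch of permutation importance, one common model-agnostic variant: shuffle one feature at a time and measure how much the model's accuracy drops. Everything in it is an assumption made for the example, including the `black_box` stand-in model, the feature names, and the synthetic data. The labels are generated by the model itself to keep the sketch self-contained; in practice they would be real outcomes.

```python
import random

def black_box(row):
    """Stand-in for an opaque model: callers see only inputs and outputs."""
    income, age, noise = row
    return 1 if income > 50 else 0  # hypothetical rule, hidden from the caller

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with the shuffled value in position j.
            X_perm = [row[:j] + (value,) + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Synthetic data: rows are (income, age, noise) tuples.
data_rng = random.Random(42)
X = [(data_rng.uniform(0, 100), data_rng.uniform(18, 80), data_rng.random())
     for _ in range(200)]
y = [black_box(row) for row in X]

feature_names = ["income", "age", "noise"]
importances = permutation_importance(black_box, X, y)
for name, score in zip(feature_names, importances):
    print(f"{name}: {score:.3f}")
```

Because the stand-in model only looks at income, shuffling age or noise leaves accuracy unchanged, while shuffling income causes a clear drop. The same probing approach works on a real model without any access to its internals.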

More broadly, there is a growing call for transparency in AI and LLMs. This means opening up the "black box" and making the inner workings of these systems more understandable and interpretable. There are several ways to achieve this, such as:

1. Algorithmic transparency: This involves providing details on how the algorithms used in AI systems work, including how they make decisions and what data they use.

2. Data transparency: This involves making the data used to train and test AI systems publicly available so that researchers and users can understand the biases and limitations of the system.

3. Explainability: This involves providing explanations for how AI systems arrived at their decisions or recommendations so that users can understand and trust the system.

4. Auditing and accountability: This involves providing mechanisms for auditing and accountability, so that errors or biases can be identified and corrected, and responsibility can be assigned.

5. Open source: This involves making the source code for the AI system publicly available. This can help to build trust in the system by allowing users to see how it works and potentially identify any issues or areas for improvement.

6. Certification and standards: This involves developing certification programs and standards for AI systems, drawing on efforts such as the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. These can provide guidelines and frameworks for ensuring transparency, accountability, and fairness in AI and LLMs.

7. Collaborative research: This involves collaborating with researchers and academics to better understand the implications of AI and LLMs. This can help to identify areas where transparency is needed and develop solutions to improve it.

8. User education: This involves educating users on how AI and LLMs work and how to interpret their decisions. This can help to build trust in the system by empowering users to understand how it arrived at its decision and identify potential errors or biases.

9. Ethics review: This involves conducting ethics reviews to ensure that AI and LLMs are being used in a responsible and ethical manner.
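To make the auditing and accountability item more concrete, here is one possible sketch of a decision log, offered as an illustration and not as an established standard. Each record stores the model version, inputs, decision, and explanation, and is chained to the previous record by a SHA-256 hash so that later edits can be detected. The function names, record fields, and sample values are all hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first record in the chain

def _digest(body):
    """Hash a record body deterministically (keys sorted before encoding)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_record(log, model_version, inputs, decision, explanation):
    """Append a decision record that is linked to the previous record's hash."""
    body = {
        "model_version": model_version,
        "inputs": inputs,
        "decision": decision,
        "explanation": explanation,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    record = {**body, "hash": _digest(body)}
    log.append(record)
    return record

def verify(log):
    """Return True if no record has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True

# Hypothetical usage: log two credit decisions, then check the chain.
log = []
append_record(log, "v1.2", {"income": 42}, "declined", "income below threshold")
append_record(log, "v1.2", {"income": 77}, "approved", "income above threshold")
print(verify(log))
```

Recording the explanation alongside each decision gives auditors something to review, and the hash chain lets them check that the record was not changed after the fact.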

Overall, achieving transparency in AI and LLMs requires a multi-faceted approach: explaining how the system works, making data available, explaining individual decisions, building accountability mechanisms, opening the source code where feasible, developing certification and standards, collaborating with researchers, educating users, and conducting ethics reviews. The right mix depends on the specific application.

In conclusion, transparency is crucial for the responsible and ethical use of AI and LLMs. It helps to make these systems fair, trustworthy, and accountable, and to build trust between users and the systems they interact with. By increasing the transparency of these models, we can better check that their decisions are consistent with our values and principles. As the use of AI and LLMs continues to grow, it is essential that we prioritize transparency in their decision-making processes so that they benefit society and uphold ethical standards.